Naive model training in sklearn with MLflow

Last modified: May 14, 2022

https://www.mlflow.org/docs/latest/quickstart.html

Loading the data

[1]:

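```python
def load_data():
    import pandas as pd

    url = "http://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv"

    # The CSV file uses ';' as the field separator
    df = pd.read_csv(url, sep=";")

    # Target: the quality score; features: every other column
    y = df["quality"]
    x = df.copy()
    x.pop("quality")

    return x, y
```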
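Copying the frame and then calling `x.pop("quality")` works, but `DataFrame.drop(columns=...)` separates features and target in one step and leaves the original frame untouched. The sketch below uses a tiny hand-made frame with made-up values (an assumption, so it runs without downloading the dataset):

```python
import pandas as pd

# Hypothetical mini version of the wine-quality table: two feature columns plus the target
df = pd.DataFrame(
    {
        "fixed acidity": [7.4, 7.8, 11.2],
        "alcohol": [9.4, 9.8, 9.8],
        "quality": [5, 5, 6],
    }
)

y = df["quality"]                  # target series
x = df.drop(columns="quality")     # new frame without the target; df keeps all columns

print(x.columns.tolist(), y.tolist())
```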

Splitting the data

[2]:

```python
def make_train_test_split(x, y):
    from sklearn.model_selection import train_test_split

    # Hold out 25% of the rows for testing; the fixed seed makes the split reproducible
    (x_train, x_test, y_train, y_test) = train_test_split(
        x,
        y,
        test_size=0.25,
        random_state=123456,
    )

    return x_train, x_test, y_train, y_test
```
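Because `random_state` is fixed, every call to `make_train_test_split` produces the same partition. This is what makes the runs compared later in MLflow comparable with one another. A small check on toy arrays (an assumption, standing in for the wine data) illustrates both the 25% test fraction and the reproducibility:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 20 rows, 2 features
x = np.arange(40).reshape(20, 2)
y = np.arange(20)

# Two independent calls with the same seed
split_a = train_test_split(x, y, test_size=0.25, random_state=123456)
split_b = train_test_split(x, y, test_size=0.25, random_state=123456)

x_train, x_test, y_train, y_test = split_a
print(len(x_train), len(x_test))
```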

Computing evaluation metrics

[3]:

```python
def eval_metrics(y_true, y_pred):
    from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

    mse = mean_squared_error(y_true, y_pred)
    mae = mean_absolute_error(y_true, y_pred)
    r2 = r2_score(y_true, y_pred)

    return mse, mae, r2
```
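For reference, with residuals e_i = y_i - ŷ_i over n samples, the three metrics are MSE = (1/n) Σ e_i², MAE = (1/n) Σ |e_i| and R2 = 1 - Σ e_i² / Σ (y_i - ȳ)². The sketch below computes them by hand with NumPy on a few made-up values and checks them against the scikit-learn functions used in `eval_metrics`:

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up true and predicted quality scores
y_true = np.array([5.0, 6.0, 5.0, 7.0])
y_pred = np.array([5.5, 5.5, 5.0, 6.0])

residuals = y_true - y_pred
mse = np.mean(residuals**2)                 # 1.5 / 4 = 0.375
mae = np.mean(np.abs(residuals))            # 2.0 / 4 = 0.5
ss_res = np.sum(residuals**2)               # residual sum of squares
ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares around the mean
r2 = 1 - ss_res / ss_tot                    # 1 - 1.5 / 2.75

print(mse, mae, r2)
```

Read this way, the R2 of about 0.089 obtained below for `alpha=0.5, l1_ratio=0.5` means that model explains only about 9% of the test-set variance of `quality`. It is barely better than always predicting the mean.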

Reporting evaluation metrics

[4]:

```python
def report(estimator, mse, mae, r2):
    print(estimator, ":", sep="")
    print(f" MSE: {mse}")
    print(f" MAE: {mae}")
    print(f" R2: {r2}")
```

Saving the model

[5]:

```python
# -----------------------------------------------------------------------------
# No longer needed with MLflow
# -----------------------------------------------------------------------------
#
# def save_best_estimator(estimator):
#
#     import os
#     import pickle
#
#     if not os.path.exists("models"):
#         os.makedirs("models")
#     with open("models/estimator.pickle", "wb") as file:
#         pickle.dump(estimator, file)
#
```

Loading the model

[6]:

```python
# -----------------------------------------------------------------------------
# No longer needed with MLflow
# -----------------------------------------------------------------------------
#
# def load_best_estimator():
#
#     import os
#     import pickle
#
#     if not os.path.exists("models"):
#         return None
#     with open("models/estimator.pickle", "rb") as file:
#         estimator = pickle.load(file)
#
#     return estimator
#
```

Training

[7]:

```python
def train_estimator(alpha=0.5, l1_ratio=0.5, verbose=1):
    import mlflow
    import mlflow.sklearn
    from sklearn.linear_model import ElasticNet

    x, y = load_data()
    x_train, x_test, y_train, y_test = make_train_test_split(x, y)

    print('Tracking directory:', mlflow.get_tracking_uri())

    with mlflow.start_run():
        estimator = ElasticNet(alpha=alpha, l1_ratio=l1_ratio, random_state=12345)
        estimator.fit(x_train, y_train)
        mse, mae, r2 = eval_metrics(y_test, y_pred=estimator.predict(x_test))
        if verbose > 0:
            report(estimator, mse, mae, r2)

        #
        # Parameter tracking
        #
        mlflow.log_param("alpha", alpha)
        mlflow.log_param("l1_ratio", l1_ratio)

        #
        # Metric tracking
        #
        mlflow.log_metric("mse", mse)
        mlflow.log_metric("mae", mae)
        mlflow.log_metric("r2", r2)

        #
        # Model tracking
        #
        mlflow.sklearn.log_model(estimator, "model")

        # -------------------------------------------------------------------------
        # No longer needed with MLflow
        # -------------------------------------------------------------------------
        #
        # best_estimator = load_best_estimator()
        # if best_estimator is None or estimator.score(x_test, y_test) > best_estimator.score(
        #     x_test, y_test
        # ):
        #     best_estimator = estimator
        #
        # save_best_estimator(best_estimator)
```

Manual search for the best hyperparameters

[8]:

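```python
train_estimator(0.5, 0.5)
```

```
Tracking directory: file:///workspace/mlflow/mlruns
ElasticNet(alpha=0.5, random_state=12345):
 MSE: 0.6349429447805036
 MAE: 0.6453803508338732
 R2: 0.0890018368226928
```

`l1_ratio=0.5` does not appear in the printed estimator because 0.5 is its default value, and scikit-learn only prints parameters that differ from their defaults.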

[9]:

```python
train_estimator(0.2, 0.2)
```

```
Tracking directory: file:///workspace/mlflow/mlruns
ElasticNet(alpha=0.2, l1_ratio=0.2, random_state=12345):
 MSE: 0.5170837474931838
 MAE: 0.5701436798648394
 R2: 0.2581028767270219
```

[10]:

```python
train_estimator(0.1, 0.1)
```

```
Tracking directory: file:///workspace/mlflow/mlruns
ElasticNet(alpha=0.1, l1_ratio=0.1, random_state=12345):
 MSE: 0.489021012335199
 MAE: 0.551252749110561
 R2: 0.29836649473051535
```

[11]:

```python
train_estimator(0.1, 0.05)
```

```
Tracking directory: file:///workspace/mlflow/mlruns
ElasticNet(alpha=0.1, l1_ratio=0.05, random_state=12345):
 MSE: 0.48683363717622585
 MAE: 0.5493759222336462
 R2: 0.30150487868829456
```

[12]:

```python
train_estimator(0.3, 0.2)
```

```
Tracking directory: file:///workspace/mlflow/mlruns
ElasticNet(alpha=0.3, l1_ratio=0.2, random_state=12345):
 MSE: 0.5322180010211477
 MAE: 0.5793993870194708
 R2: 0.23638867818623654
```

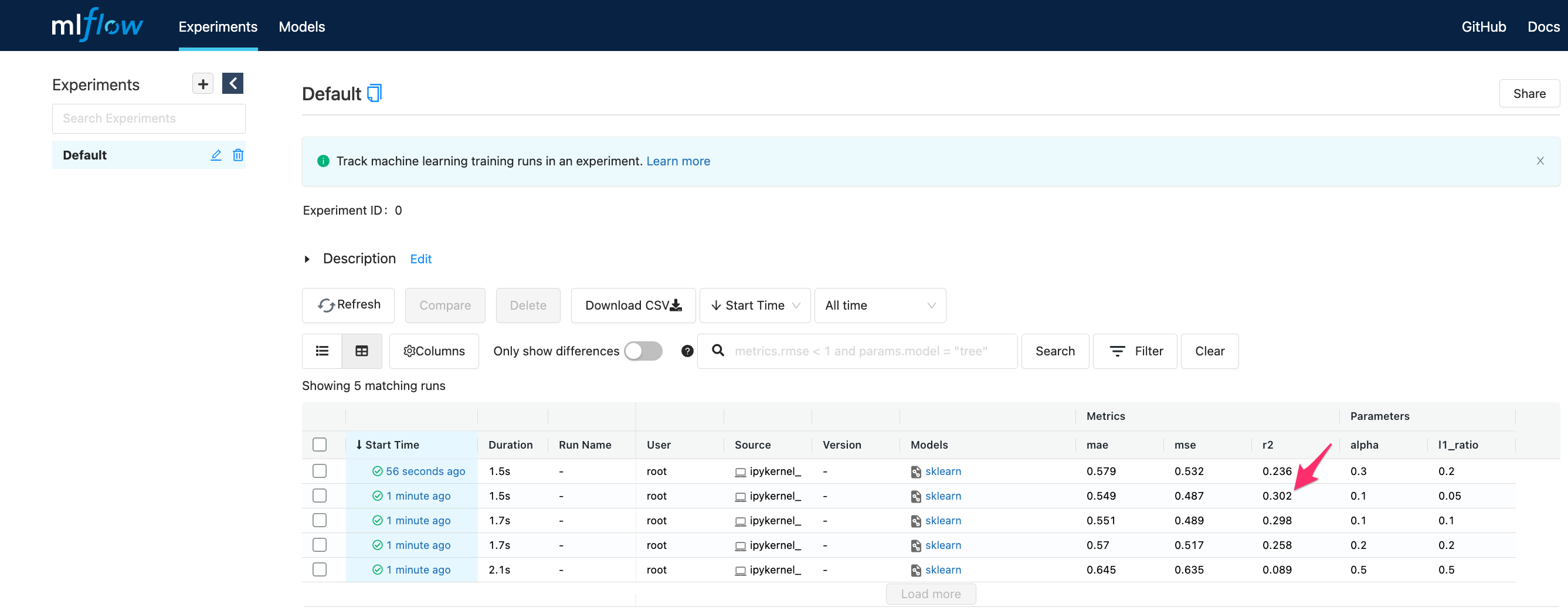
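The manual calls above can be replaced by a loop over the same (alpha, l1_ratio) pairs. The sketch below is a hedged illustration that uses a synthetic dataset from `make_regression` instead of the wine data. That substitution is an assumption made so it runs offline, so the winning pair is not expected to match the results above. It keeps the test R2 of each pair and selects the highest.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the wine data (assumption)
x, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.25, random_state=123456
)

# The same pairs tried by hand above
grid = [(0.5, 0.5), (0.2, 0.2), (0.1, 0.1), (0.1, 0.05), (0.3, 0.2)]

scores = {}
for alpha, l1_ratio in grid:
    model = ElasticNet(alpha=alpha, l1_ratio=l1_ratio, random_state=12345)
    model.fit(x_train, y_train)
    scores[(alpha, l1_ratio)] = r2_score(y_test, model.predict(x_test))

# Pair with the highest test R2
best_params = max(scores, key=scores.get)
print(best_params, scores[best_params])
```

In the notebook itself, the loop body would simply call `train_estimator(alpha, l1_ratio)`, so that each pair is logged as its own MLflow run.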

Comparing models with MLflow

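To open the user interface, run:

```bash
mlflow ui
```

Note: inside Docker, use:

```bash
mlflow ui --host 0.0.0.0
```

By default the server only listens on `127.0.0.1`. `--host 0.0.0.0` makes it reachable from outside the container. The interface then lists the logged runs: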

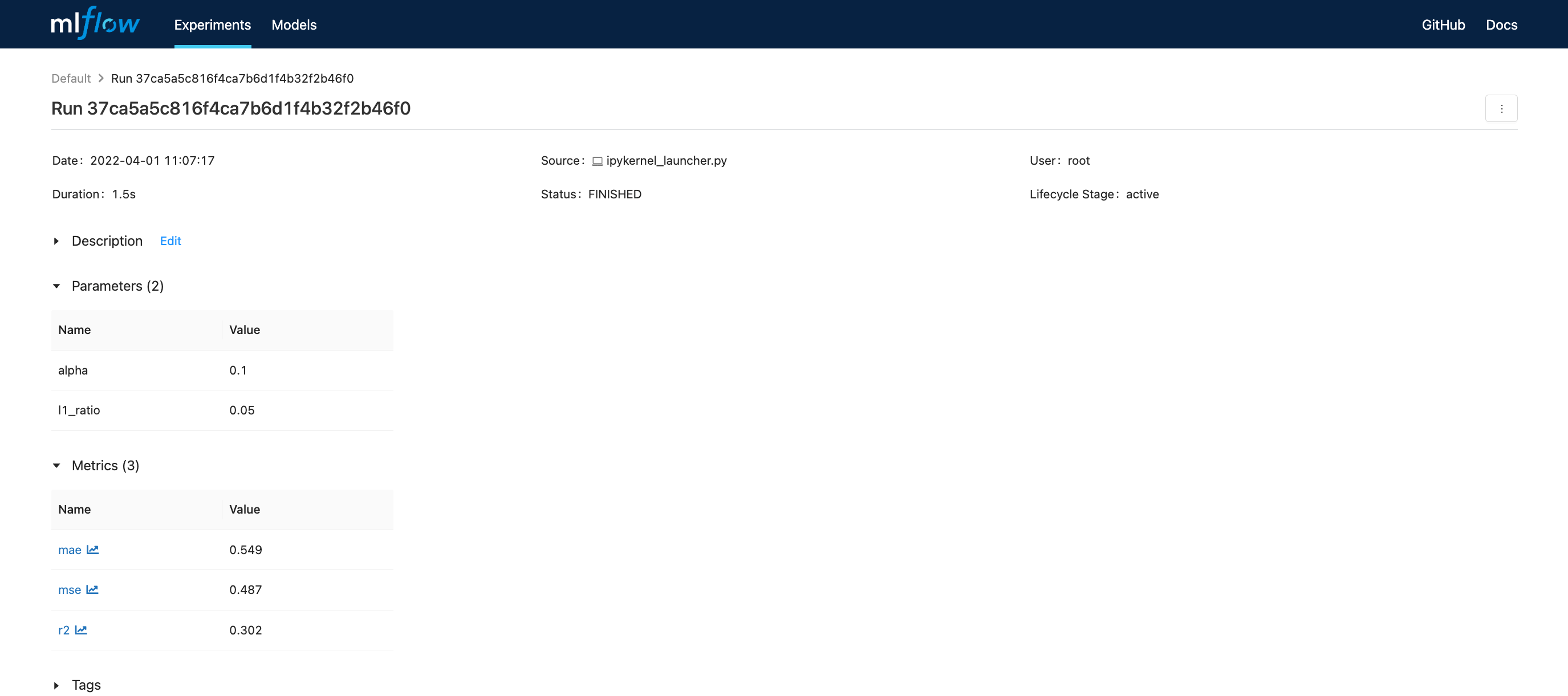

Double-click a model's entry in the 'Start Time' column to open its details.

Verification

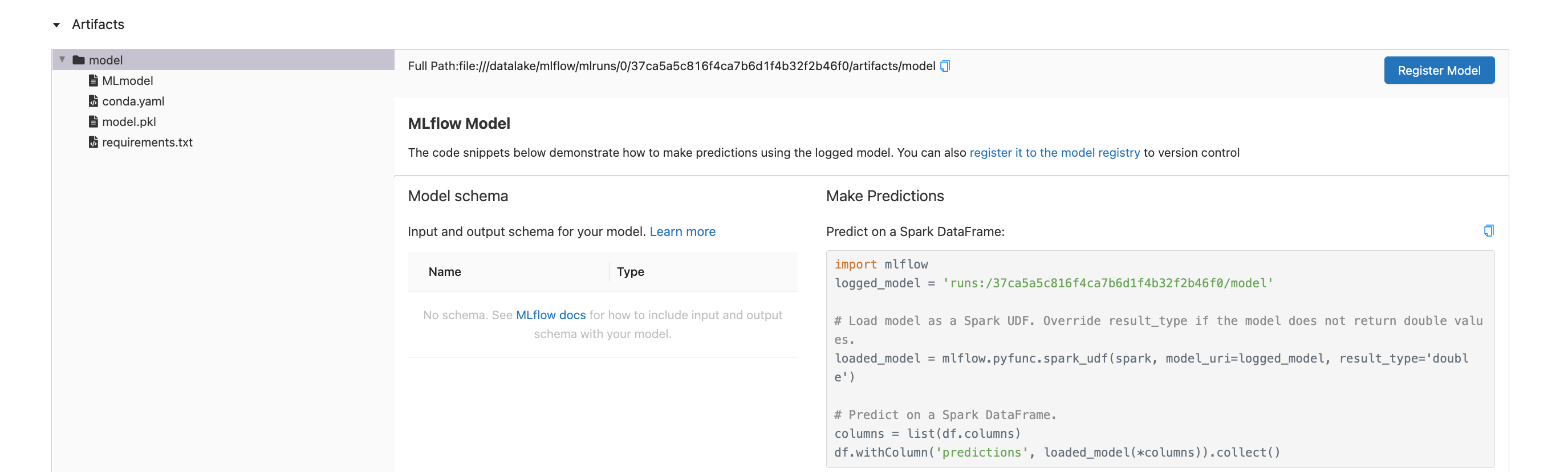

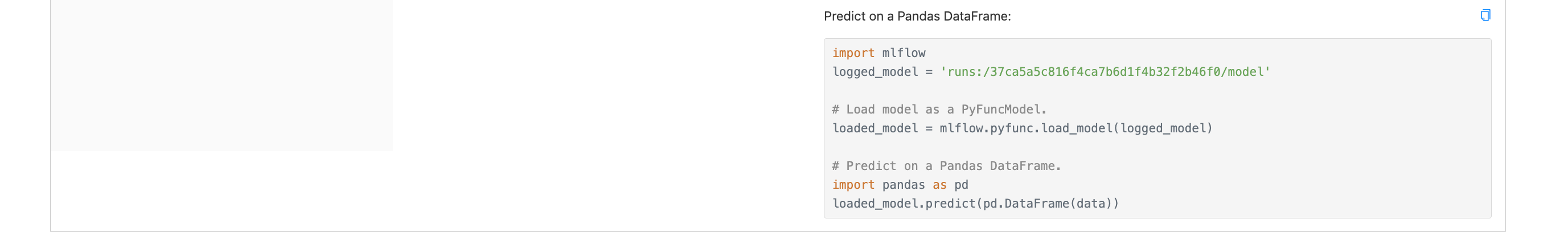

[ ]:

```python
def check_estimator(estimator_path):
    import mlflow.pyfunc

    x, y = load_data()
    x_train, x_test, y_train, y_test = make_train_test_split(x, y)

    estimator = mlflow.pyfunc.load_model(estimator_path)
    mse, mae, r2 = eval_metrics(y_test, y_pred=estimator.predict(x_test))

    #
    # Should match the best model found in the previous cells
    #
    report(estimator, mse, mae, r2)


# NOTE: this URI is copied directly from the MLflow interface (it is specific to one run)
check_estimator(estimator_path="runs:/37ca5a5c816f4ca7b6d1f4b32f2b46f0/model")
```

[14]:

```
%%bash
rm -rf outputs mlruns models
```