Aprendizaje no supervizado#

Ultima modificación: 2024-01-22 | YouTube

[1]:

import numpy as np

np.random.seed(1)

[2]:

#

# K-means clustering

#

from sklearn import cluster, datasets

X_iris, y_iris = datasets.load_iris(return_X_y=True)

k_means = cluster.KMeans(n_clusters=3)

k_means.fit(X_iris)

display(k_means.labels_[::10])

display(y_iris[::10])

array([1, 1, 1, 1, 1, 2, 0, 0, 0, 0, 2, 2, 2, 2, 2], dtype=int32)

array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2])

[3]:

#

# Vector quantization

#

import scipy as sp

try:

face = sp.face(gray=True)

except AttributeError:

from scipy import misc

face = misc.face(gray=True)

# Transforma los datos a un array de (n_sample, n_feature)

X = face.reshape((-1, 1))

k_means = cluster.KMeans(

n_clusters=5,

n_init=1, # número de veces que el algoritmo corre con diferentes semillas para los centros

)

k_means.fit(X)

values = k_means.cluster_centers_.squeeze()

labels = k_means.labels_

face_compressed = np.choose(labels, values)

face_compressed.shape = face.shape

/var/folders/r8/qqz25js92db4032b_bt3zh380000gn/T/ipykernel_7630/3867241333.py:11: DeprecationWarning: scipy.misc.face has been deprecated in SciPy v1.10.0; and will be completely removed in SciPy v1.12.0. Dataset methods have moved into the scipy.datasets module. Use scipy.datasets.face instead.

face = misc.face(gray=True)

[4]:

#

# Hierarchical agglomerative clustering

#

from scipy.ndimage import gaussian_filter

from skimage.data import coins

from skimage.transform import rescale

rescaled_coins = rescale(

gaussian_filter(coins(), sigma=2),

0.2,

mode="reflect",

anti_aliasing=False,

)

X = np.reshape(rescaled_coins, (-1, 1))

[5]:

from sklearn.feature_extraction import grid_to_graph

connectivity = grid_to_graph(*rescaled_coins.shape)

[6]:

n_clusters = 27 # number of regions

from sklearn.cluster import AgglomerativeClustering

ward = AgglomerativeClustering(

n_clusters=n_clusters,

linkage="ward",

connectivity=connectivity,

)

ward.fit(X)

label = np.reshape(ward.labels_, rescaled_coins.shape)

label[:10]

[6]:

array([[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2,

2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2,

2, 15, 15, 15, 15, 2, 2, 2, 2, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 1, 1, 1, 1,

1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 2, 2, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2,

1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 15,

15, 15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 12, 12, 12,

12, 12, 2, 2, 1, 1, 2, 2, 2, 17, 17, 17, 17, 17, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 15, 15,

15, 15, 15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 1, 26, 26, 26, 26, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 12, 12, 12, 12,

12, 12, 12, 2, 2, 2, 2, 2, 17, 17, 17, 17, 17, 17, 17, 2,

2, 2, 2, 2, 2, 10, 10, 10, 10, 10, 2, 2, 2, 15, 15, 15,

15, 15, 15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 1, 26, 26, 26, 26, 26, 26, 1, 1, 1, 1,

1, 1, 24, 24, 24, 24, 1, 1, 1, 1, 1, 12, 12, 12, 12, 12,

12, 12, 12, 12, 2, 2, 2, 17, 17, 17, 17, 17, 17, 17, 17, 17,

2, 2, 2, 2, 10, 10, 10, 10, 10, 10, 10, 2, 2, 15, 15, 15,

15, 15, 15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2],

[ 1, 1, 1, 1, 1, 26, 26, 26, 26, 26, 26, 26, 26, 1, 1, 1,

1, 24, 24, 24, 24, 24, 24, 24, 1, 1, 12, 12, 12, 12, 12, 12,

12, 12, 12, 12, 2, 2, 2, 17, 17, 17, 17, 17, 17, 17, 17, 17,

2, 2, 2, 2, 10, 10, 10, 10, 10, 10, 10, 10, 2, 15, 15, 15,

15, 15, 15, 15, 15, 15, 15, 15, 15, 2, 2, 2, 2]])

[7]:

#

# Feature agglomeration

#

digits = datasets.load_digits()

images = digits.images

X = np.reshape(images, (len(images), -1))

connectivity = grid_to_graph(*images[0].shape)

agglo = cluster.FeatureAgglomeration(

connectivity=connectivity,

n_clusters=32,

)

agglo.fit(X)

X_reduced = agglo.transform(X)

X_approx = agglo.inverse_transform(X_reduced)

images_approx = np.reshape(X_approx, images.shape)

Decompositions#

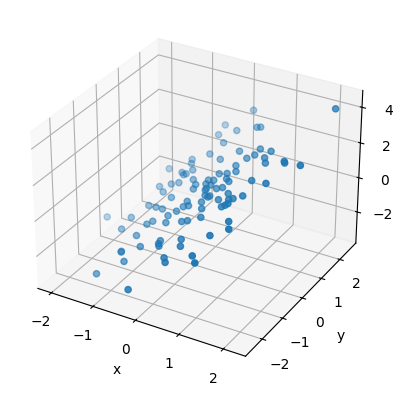

[8]:

# Crea una señal con dos variables informativas

x1 = np.random.normal(size=(100, 1))

x2 = np.random.normal(size=(100, 1))

x3 = x1 + x2

X = np.concatenate([x1, x2, x3], axis=1)

[9]:

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111, projection="3d")

ax.scatter(X[:, 0], X[:, 1], X[:, 2])

_ = ax.set(xlabel="x", ylabel="y", zlabel="z")

[10]:

#

# Principal component analysis (PCA)

#

from sklearn import decomposition

pca = decomposition.PCA()

pca.fit(X)

print(pca.explained_variance_)

[3.07269308e+00 6.35114032e-01 3.84346078e-32]

[11]:

pca.set_params(n_components=2)

X_reduced = pca.fit_transform(X)

X_reduced.shape

[11]:

(100, 2)

[12]:

#

# Independent Component Analysis: ICA

#

# Generate sample data

import numpy as np

from scipy import signal

time = np.linspace(0, 10, 2000)

s1 = np.sin(2 * time) # Signal 1 : sinusoidal signal

s2 = np.sign(np.sin(3 * time)) # Signal 2 : square signal

s3 = signal.sawtooth(2 * np.pi * time) # Signal 3: saw tooth signal

S = np.c_[s1, s2, s3]

S += 0.2 * np.random.normal(size=S.shape) # Add noise

S /= S.std(axis=0) # Standardize data

# Mix data

A = np.array([[1, 1, 1], [0.5, 2, 1], [1.5, 1, 2]]) # Mixing matrix

X = np.dot(S, A.T) # Generate observations

[13]:

# Computa ICA

# Este método separa una señal multivariada en subcomponentes aditivas

# maximizando la independencia entre ellas. Usualmente se usa para

# separa señales superpuestas

ica = decomposition.FastICA()

S_ = ica.fit_transform(X) # Get the estimated sources

A_ = ica.mixing_.T

np.allclose(X, np.dot(S_, A_) + ica.mean_)

[13]:

True